Every security team has a rule they will not turn on. A bot detection rule that fires correctly, flags real abuse, and stays in monitor mode anyway. Not because the detection is wrong. Because nobody can prove the block is safe.

This is the enforcement gap. The distance between seeing an attack and stopping it. It is not a people problem or a process problem. It is an architecture problem. Teams are not too cautious. They are rationally responding to an architecture that makes enforcement unsafe.

Today we are launching Programmable Bot Protection. It brings detection and enforcement together inside the application runtime, and it lets you see exactly what you would block before you block it.

Bots do not look like bots anymore

Bot detection used to be a fingerprinting problem. Browser attributes. JavaScript challenges. Device fingerprints. If a bot could not perfectly mimic a real browser, you could catch it.

AI-powered bots changed the equation. They use real browsers. They solve CAPTCHAs on the first attempt. They rotate identities mid-session. They carry valid device fingerprints, maintain legitimate-looking sessions, and time their requests to blend in with real users.

At the HTTP request layer, these bots are indistinguishable from customers.

The industry response was split across two tools that were never designed to work together. WAFs tried to detect and block at the edge, but they were built for signatures, not behavior. Dedicated bot vendors improved detection with fingerprinting, but handed enforcement back to the WAF. Better signals, same bottleneck. Detection in one place, enforcement in another, and no safe way to connect them.

The deeper issue is structural. A single HTTP request does not contain enough information to distinguish a sophisticated bot from a legitimate user. The signals edge tools rely on (IP reputation, fingerprints, headers, challenge responses) are the exact signals AI-powered bots are designed to defeat. The information needed to identify the bot does not exist in a single request. It exists in the pattern of behavior across requests, sessions, and time.

The only place to see that behavior is inside the application runtime.

Runtime behavioral detection

Programmable Bot Protection runs inside the application runtime. In the live request path. While the request is being processed.

It is built on WebAssembly and runs inside your application without adding latency, without requiring agents, and without sitting outside the request path reconstructing what happened after the fact. It deploys as part of your application, not in front of it.

The system builds behavioral baselines from your actual traffic. Not static signatures. Not vendor rulesets. Learned behavior that adapts as your traffic changes. When a flash sale drives 10x volume, baselines adjust. When seasonal patterns shift, thresholds recalibrate. Every request is evaluated against that learned behavior.
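To make the idea of a baseline that adapts concrete, here is a minimal sketch using an exponentially weighted moving average. It is an illustration only, not the product's implementation: the class name `AdaptiveBaseline`, the smoothing factor `alpha`, and the choice of requests per minute as the metric are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class AdaptiveBaseline:
    """Hypothetical rolling baseline for one traffic metric (e.g. requests/minute)."""

    alpha: float = 0.1  # assumed smoothing factor: higher adapts faster
    mean: float = 0.0
    var: float = 0.0
    samples: int = 0

    def score(self, value: float) -> float:
        """Return how many standard deviations `value` sits from the baseline."""
        if self.samples < 2 or self.var == 0.0:
            return 0.0  # not enough history to judge
        return abs(value - self.mean) / math.sqrt(self.var)

    def update(self, value: float) -> None:
        """Fold a new observation into the exponentially weighted mean and variance."""
        if self.samples == 0:
            self.mean = value
        else:
            delta = value - self.mean
            self.mean += self.alpha * delta
            self.var = (1 - self.alpha) * (self.var + self.alpha * delta * delta)
        self.samples += 1


baseline = AdaptiveBaseline()
for rpm in [100, 110, 90, 105, 95] * 4:  # ordinary traffic
    baseline.update(rpm)
spike_score = baseline.score(1000)       # a sudden 10x jump stands out

for _ in range(50):                      # the 10x volume persists
    baseline.update(1000)
adapted_score = baseline.score(1000)     # the same volume is now near the baseline
```

In this sketch a sudden jump scores far from the baseline, but once the higher volume persists the baseline absorbs it and the same value no longer looks unusual.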

Session rotation does not reset the picture. IP cycling does not clear the history. Device fingerprint changes do not start fresh. The behavioral record persists across evasion techniques, because it tracks what the actor does, not what the actor looks like.

What this looks like against real attacks

Take credential stuffing. A single login attempt from an AI-powered bot is indistinguishable from a real customer. The username is valid. The password is plausible. The request comes from a residential IP, a real browser, a clean device fingerprint. At the perimeter, it is a normal login.

Inside the application runtime, the picture is completely different. That session has attempted 400 logins across 12 accounts in 90 minutes, rotating IPs and device fingerprints between attempts. The behavioral pattern is unmistakable. The individual request is invisible.
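The correlation described above can be sketched as a sliding-window counter keyed on the session. This is a simplified illustration, not the product's detection logic: `LoginCorrelator`, the `session_id` key, and the thresholds are hypothetical, and how a runtime keeps a stable key while IPs and fingerprints rotate is assumed rather than shown.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginAttempt:
    session_id: str   # assumed stable correlation key
    account: str
    timestamp: float  # seconds


class LoginCorrelator:
    """Hypothetical detector: flags many logins across many accounts in one window."""

    def __init__(self, window_seconds: float = 90 * 60,
                 max_attempts: int = 50, max_accounts: int = 5) -> None:
        self.window_seconds = window_seconds
        self.max_attempts = max_attempts
        self.max_accounts = max_accounts
        self._history = defaultdict(deque)

    def observe(self, attempt: LoginAttempt) -> bool:
        """Record the attempt and return True if the session now looks like stuffing."""
        history = self._history[attempt.session_id]
        history.append(attempt)
        # Drop attempts that have aged out of the sliding window.
        cutoff = attempt.timestamp - self.window_seconds
        while history and history[0].timestamp < cutoff:
            history.popleft()
        accounts = {a.account for a in history}
        return (len(history) > self.max_attempts
                and len(accounts) > self.max_accounts)


correlator = LoginCorrelator()
# 400 attempts across 12 accounts, spread over roughly 90 minutes.
flags = [
    correlator.observe(LoginAttempt("session-a", f"user{i % 12}", i * 13.5))
    for i in range(400)
]
```

The first attempt is not flagged, which matches the point in the text: no single request carries the signal. The flag only appears once the history accumulates.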

That gap between what a single request reveals and what behavioral correlation reveals is the gap the entire bot protection industry has failed to close. Every one of the 21 attack categories in the OWASP Automated Threats to Web Applications framework exploits the same blind spot: each individual request looks legitimate. The abuse only becomes visible when you correlate behavior across sessions, identities, devices, and time.

Safe to block in production

Runtime detection matters. But the real shift is what it makes possible: enforcement you can validate before you commit.

Teams have known about these attacks for years. They have dashboards full of flagged traffic. They know exactly what the problems are. But a false positive on a deposit flow loses a customer permanently. A bad block during a live sporting event is revenue that never comes back. A mistaken enforcement during a product drop costs thousands per second. The gap has never been awareness. It has been confidence.

Staging environments do not close that gap. Staging does not have your real users, your real attacks, your real edge cases, or your 3 a.m. traffic during a flash sale. A policy that passes in staging may fail in production.

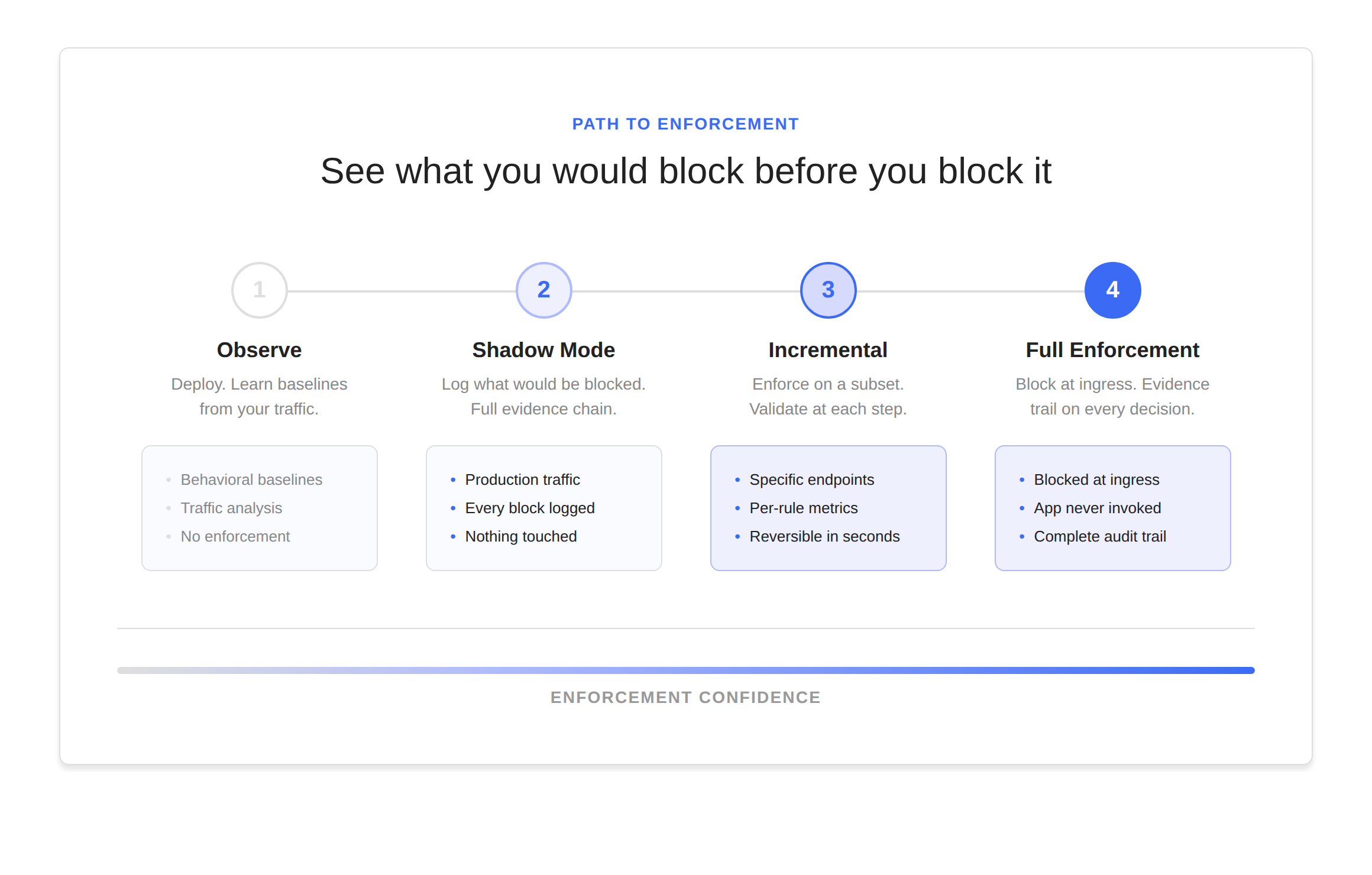

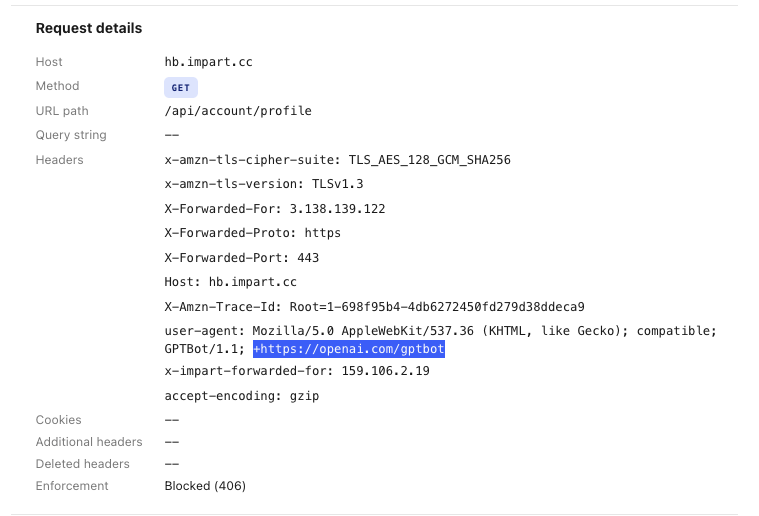

Shadow mode removes the gap. You run Programmable Bot Protection against live production traffic. Nothing is blocked. But everything that would be blocked is logged with full context: what triggered, why, and the complete request details. You validate against your own data. Your own peak patterns. Your own edge cases. You watch it for a day, a week, however long you need.
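As a rough sketch of what shadow mode means in code, assume a policy carries a mode flag and every match is logged whether or not it blocks. The names here (`Policy`, `Mode`, `evaluate`, the log fields) are hypothetical and do not represent the product's API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Mode(Enum):
    SHADOW = "shadow"    # log what would be blocked, block nothing
    ENFORCE = "enforce"  # actually block


@dataclass
class Policy:
    name: str
    matches: Callable[[dict], bool]  # hypothetical detection predicate
    mode: Mode = Mode.SHADOW


def evaluate(policy: Policy, request: dict, audit_log: list) -> bool:
    """Return True if the request may proceed; log every match with its context."""
    if not policy.matches(request):
        return True
    blocked = policy.mode is Mode.ENFORCE
    audit_log.append({
        "rule": policy.name,
        "mode": policy.mode.value,
        "blocked": blocked,
        "request": request,  # full request details kept for later review
    })
    return not blocked


log: list = []
policy = Policy("too-many-logins", lambda r: r.get("login_attempts", 0) > 50)
request = {"path": "/login", "login_attempts": 400}

allowed_in_shadow = evaluate(policy, request, log)   # request proceeds, match is logged
policy.mode = Mode.ENFORCE
allowed_in_enforce = evaluate(policy, request, log)  # same rule now blocks
```

The detection logic is identical in both modes; only the consequence changes. That is what lets the shadow-mode log stand in as evidence for what enforcement would do.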

When you turn enforcement on, it is not a judgment call. It is a decision backed by evidence from your own production environment. Enforcement is incremental. Start with a subset of traffic. Apply to specific endpoints. Validate at each step. Expand with confidence.
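One common way to implement incremental enforcement is a deterministic percentage rollout scoped to named endpoints. The sketch below assumes that approach; the function names, the `actor_key` parameter, and the hash-bucket scheme are illustrative and are not taken from the product.

```python
import hashlib


def in_enforcement_cohort(actor_key: str, percent: int) -> bool:
    """Deterministically place an actor in one of 100 buckets and compare to `percent`."""
    digest = hashlib.sha256(actor_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def should_enforce(path: str, actor_key: str,
                   endpoints: set, percent: int) -> bool:
    """Enforce only on scoped endpoints, and only for the rolled-out share of traffic."""
    return path in endpoints and in_enforcement_cohort(actor_key, percent)


scoped = {"/login", "/checkout"}
actors = [f"actor-{i}" for i in range(1000)]

# Widening the rollout only adds actors; nobody flips back out of enforcement.
at_10 = {a for a in actors if should_enforce("/login", a, scoped, 10)}
at_50 = {a for a in actors if should_enforce("/login", a, scoped, 50)}
```

Because the bucket is derived from a hash of the key, the same actor gets the same answer on every request, and raising the percentage expands the cohort without reshuffling it.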

Requests are blocked at ingress. Your application is never invoked. Downstream services are never stressed. Every block has a complete evidence trail. Per-rule metrics show execution time, match counts, block counts, error rates, and latency impact in production. Every policy change is reversible in seconds.

Today, 95% of Impart customers actively block in production. In an industry where most teams never move past monitor mode, the difference is not better detection. It is the confidence to enforce.

Your policies, your code, your control

AI-powered bots adapt in real time. Your defenses need to move just as fast.

With most bot protection, adapting means opening a vendor ticket, describing the pattern you are seeing, hoping it gets prioritized, and deploying a change you cannot fully inspect into a system you do not control.

With Programmable Bot Protection, your bot policies are code. Real code. Stored in Git. Deployed through your CI/CD pipeline. Reviewed in pull requests. Rolled back in seconds.

A new attack pattern shows up Tuesday morning. By Tuesday afternoon your team has written a detection rule, tested it against live traffic, reviewed the PR, and deployed it to production. That is the speed this threat requires.

You define what abuse means for your application. You write detection logic that matches how your business actually works. You test policies against real production traffic before they ever enforce. And you evolve your defenses continuously, because the threat is evolving continuously.

This is what programmable means. Full control over your enforcement logic. The ability to inspect, test, version, and change anything, at any time, without asking permission.

What we built and who it is for

If your bot protection enforcement rate is a number you would rather not report on, the problem is not your team. It is the architecture. Detection in one tool. Enforcement in another. Signals too imprecise to trust. No way to prove a block is safe. No way to adapt at the speed the threat demands.

Programmable Bot Protection brings detection and enforcement together inside the application runtime. Behavioral analysis across all 21 OWASP automated threat categories. Shadow mode validation against real production traffic. Policies as code. Per-rule observability. Incremental enforcement.

It is available today as part of the Impart runtime application protection platform.

See what you would block before you block it.
